Technical Profile

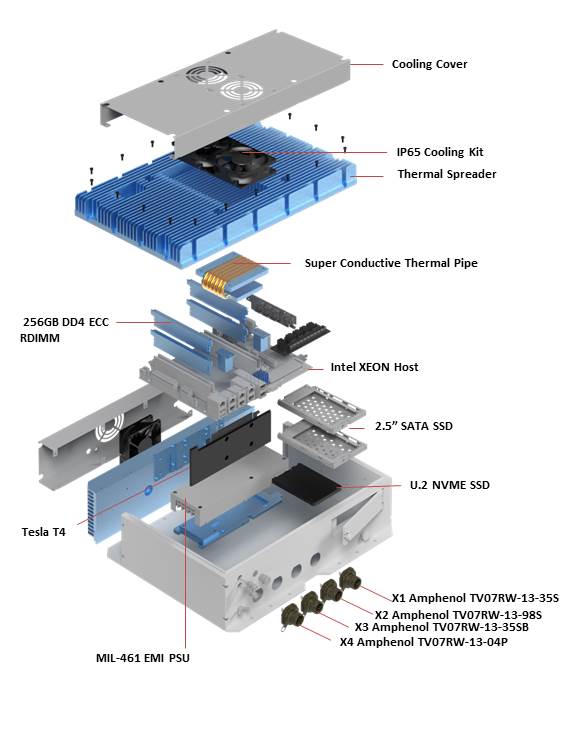

AV800 is 7StarLake's ruggedized AI inference platform. It is specifically designed for the NVIDIA® Tesla T4 and supports the Intel® Xeon® D-2183IT (Skylake-D) processor. Built on 7StarLake's Open, Modular, Scalable Architecture, the AV800 provides an optimized cooling solution for the Tesla T4 that ensures stable system operation in harsh environments. In addition to the Tesla T4, the AV800 provides one M.2 NVMe slot for fast storage access. Combining stunning inference performance, a powerful CPU and expansion capability, it is the perfect ruggedized platform for versatile edge AI applications.

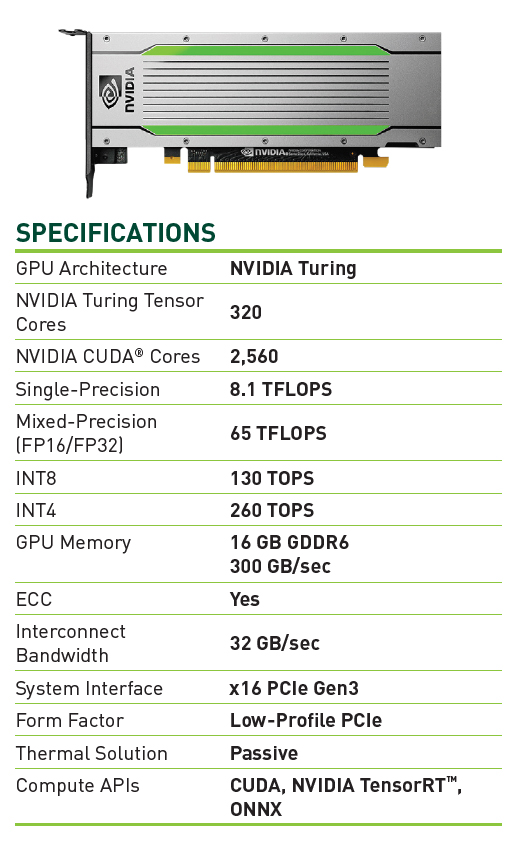

The AV800 ruggedized AI inference platform is designed for advanced inference acceleration applications such as voice, video, image and recommendation services. It supports the NVIDIA® Tesla T4 GPU, which delivers 8.1 TFLOPS in FP32 and 130 TOPS in INT8 for real-time inference with trained neural network models.

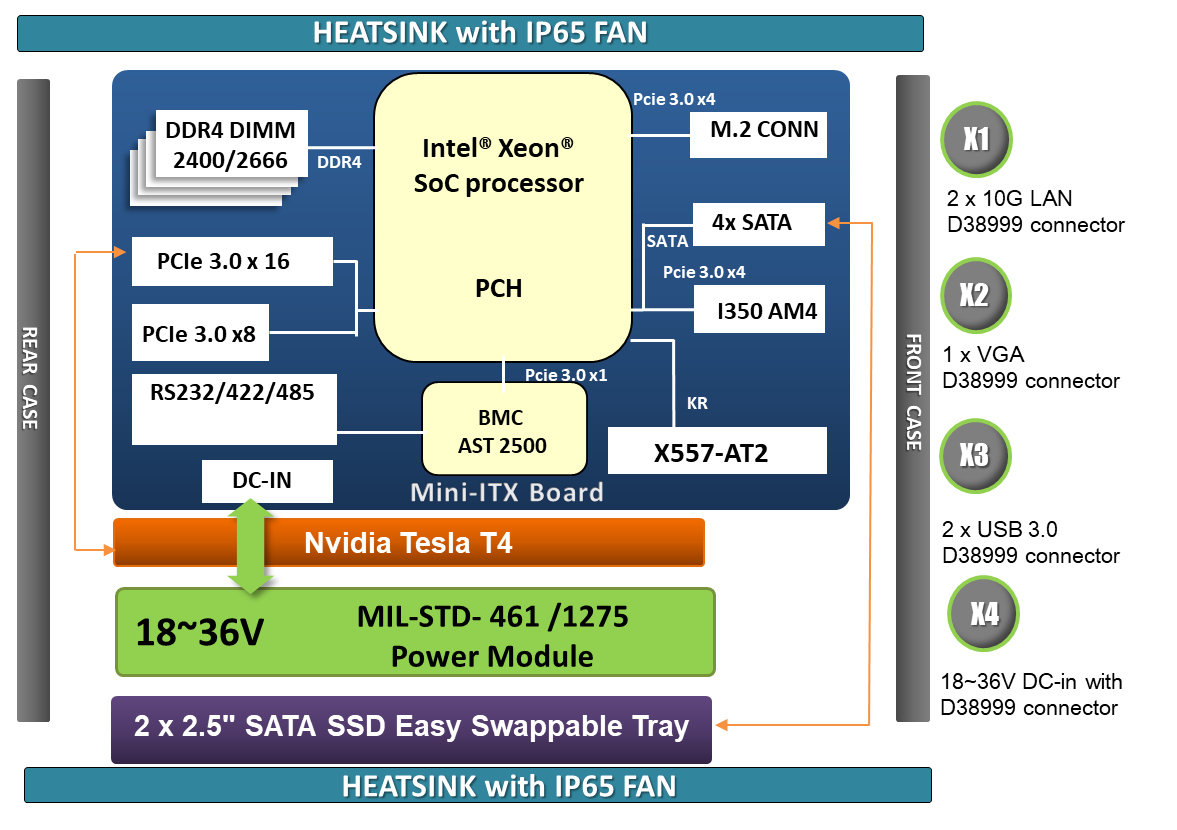

IT Block Diagram

Features

Computing Features

I/O and Expansion Options

Environment

Operates over an extended temperature range of -20°C to +55°C

Advanced thermal solution

From the internal conduction cooling system, whose copper heat pipes carry the main CPU and GPU heat to an external dual-sided aluminum heat sink, to the system fan with active cooling control and the power fan for turbo operation, every element of the thermal solution is designed to guarantee superior system performance and stable operation in critical environments.

Edge AI Inference: NVIDIA Tesla T4 & Intel Xeon D-2187NT



Skylake-D: The Intel® Xeon® processor D-2183IT belongs to the Intel® Xeon® D-2100 product family, Intel's 64-bit system on a chip (SoC) line built on Intel® 14 nm silicon technology. This lineup offers hardware and software scalability from four up to eighteen cores, making it the perfect choice for a broad range of high-performing, low-power solutions that bring intelligence and Intel® Xeon® reliability, availability, and serviceability (RAS) to the edge. For applications where space is at a premium, the integrated Platform Controller Hub (PCH) and Intel® Ethernet in a ball grid array (BGA) package offer an inspiring level of design simplicity. The Intel® Xeon® processor Skylake-D product family is offered with a seven-year extended supply life and 10-year reliability for Internet of Things designs.

Designed to Meet MIL-STD-810 and MIL-STD-461

The AV800 is designed to meet strict size, weight, and power (SWaP) requirements and to withstand harsh environments, including temperature extremes, shock/vibration, sand/dust, and salt/fog.

The AV800 is a rugged edge AI inference server compliant with MIL-STD-461 (EMI/EMC) and MIL-STD-1275. It passes numerous environmental tests, including temperature, altitude, shock, vibration, voltage spikes, electrostatic discharge and more. The sealed, compact chassis shields the circuit cards from external environmental conditions such as sand, dust, and humidity.