Technical Profile

Artificial Intelligence (AI) and Machine Learning (ML) as the Backbone of the Success of Driverless Vehicles

As technology advances, a new generation of cutting-edge vehicles has emerged. Of these, driverless vehicles have attracted the most attention. Their road to success is not effortless, however; it depends on the support of many technologies. Among these, Artificial Intelligence (AI) and Machine Learning (ML) form the backbone of the success of driverless vehicles.

Safety is Crucial for Driverless Vehicles

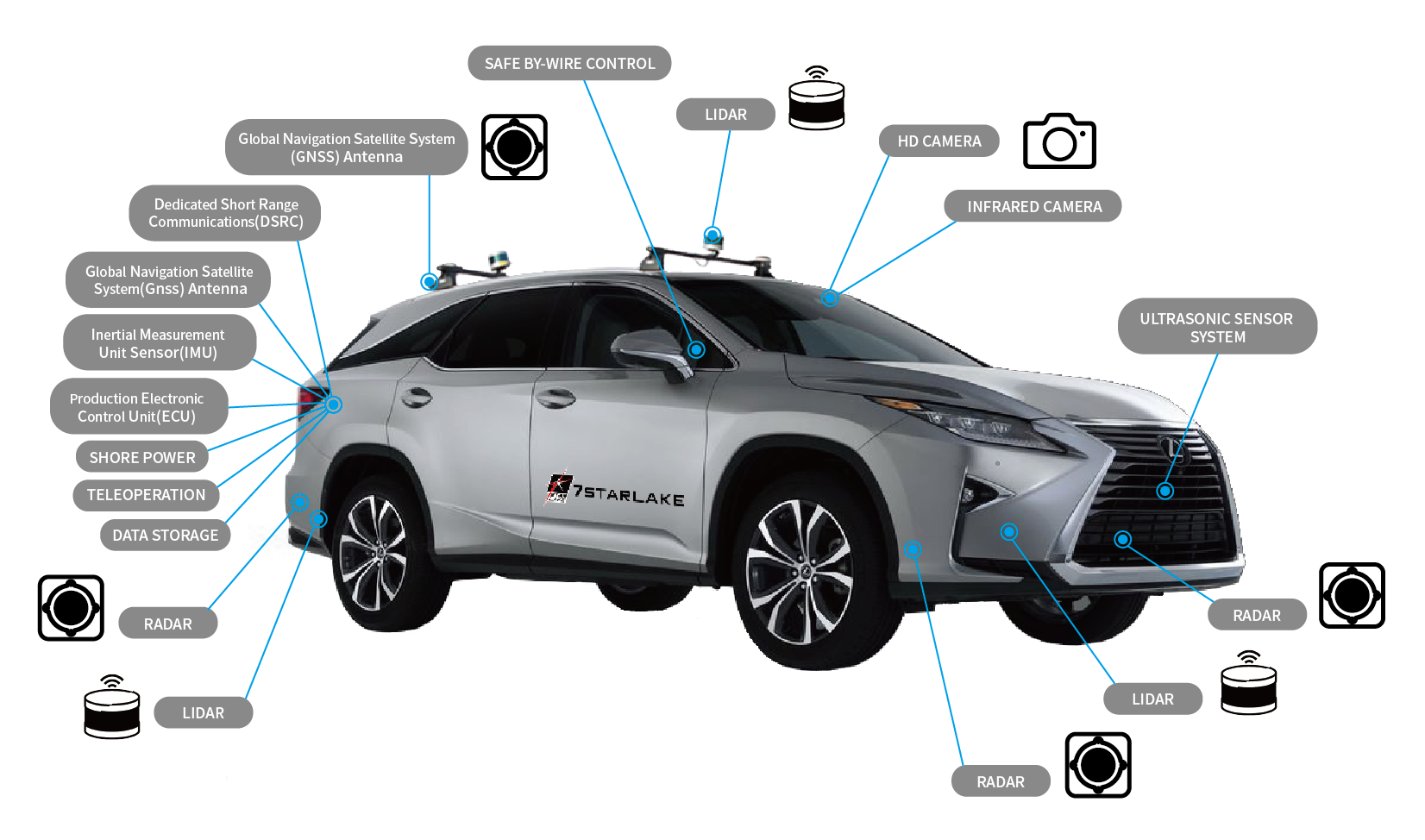

Since driverless vehicles first began to gain momentum, a number of problems have emerged. Safety in particular has drawn public attention, since it is always the first priority for users. In light of the potential dangers, tracking objects, pedestrians, and other vehicles has become the main concern for driverless cars. Approaches such as high-resolution maps, path planning, and simultaneous localisation and mapping (SLAM) have been applied to reduce safety risks. These approaches rely on suitable hardware. In multi-sensor setups, computer vision, radar, and LiDAR are the three most familiar technologies used to support them.

AI and ML Turn Collected Data into Vehicle Instructions

The massive amount of data collected through the approaches mentioned above is needed for further purposes. Training accounts for most of it: the sorted data is used to improve driverless vehicles and progressively enhance road safety. An instrumented vehicle can consume over 30 TB of data per day, while a fleet of 10 vehicles can generate 78 PB of raw data. Vehicle operation benefits from the analysed data as well, since it can be used to correct driving instructions. Data collection continues in the background during driving, although it is likely to be selective.

Typically, a camera delivering full HD, RAW12 video at 40 fps produces approximately 120 MB/s, while a radar produces close to 220 MB/s. A vehicle with 6 cameras and 6 radars therefore generates roughly 2,040 MB/s (about 2.04 GB/s) of raw data, which is around 58 TB over an 8-hour test drive shift.
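The arithmetic behind these figures can be checked with a short sketch. The per-sensor rates and sensor counts come from the text above; the function names are illustrative, and the camera estimate assumes a 1920×1080 sensor with one 12-bit sample per pixel and decimal units (1 MB = 10^6 bytes), which the text does not state explicitly.

```python
# Per-sensor raw data rates quoted in the text, in MB/s.
CAMERA_MB_PER_S = 120  # full HD, RAW12, 40 fps
RADAR_MB_PER_S = 220


def camera_raw_rate_mb_per_s(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Estimate an uncompressed camera stream in MB/s (assumes 1 MB = 10**6 bytes)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1_000_000


def vehicle_rate_mb_per_s(cameras: int, radars: int) -> int:
    """Sum the raw data rate of all cameras and radars on one vehicle."""
    return cameras * CAMERA_MB_PER_S + radars * RADAR_MB_PER_S


def shift_volume_tb(rate_mb_per_s: float, hours: float) -> float:
    """Convert a sustained MB/s rate into TB over a shift (1 TB = 10**6 MB)."""
    return rate_mb_per_s * hours * 3600 / 1_000_000


# Assumed sensor geometry: 1920x1080, 12 bits per pixel, 40 fps.
camera_estimate = camera_raw_rate_mb_per_s(1920, 1080, 12, 40)
rate = vehicle_rate_mb_per_s(cameras=6, radars=6)
volume = shift_volume_tb(rate, hours=8)

print(f"camera estimate: {camera_estimate:.1f} MB/s")
print(f"vehicle total:   {rate} MB/s")
print(f"8-hour shift:    {volume:.2f} TB")
```

Under these assumptions the camera estimate lands near the quoted 120 MB/s, and the vehicle total works out to 2,040 MB/s, or just under 59 TB per 8-hour shift.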

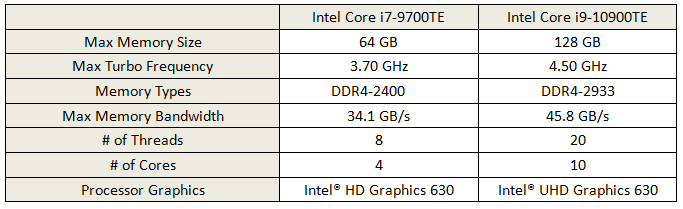

Comparison of HORUS 330 and HORUS 340

One of the main features of the HORUS 340 is its Intel 10th Gen (Comet Lake-S) i9-10900TE processor. Compared with 9th Gen Coffee Lake, Comet Lake-S offers up to 10 CPU cores, and Hyper-Threading is enabled on all models except Celeron. Operating frequencies have also been raised on certain processors: the Comet Lake single-core turbo boost reaches up to 5.3 GHz, which is 300 MHz higher than Coffee Lake-S, and the all-core turbo boost reaches up to 4.9 GHz. Thermal Velocity Boost is available on Core i9, while Turbo Boost Max 3.0 is available on Core i7 and i9. For memory, Core i7 and i9 support DDR4-2933, whereas Core i3, Pentium, and Celeron support DDR4-2666.

Introduction

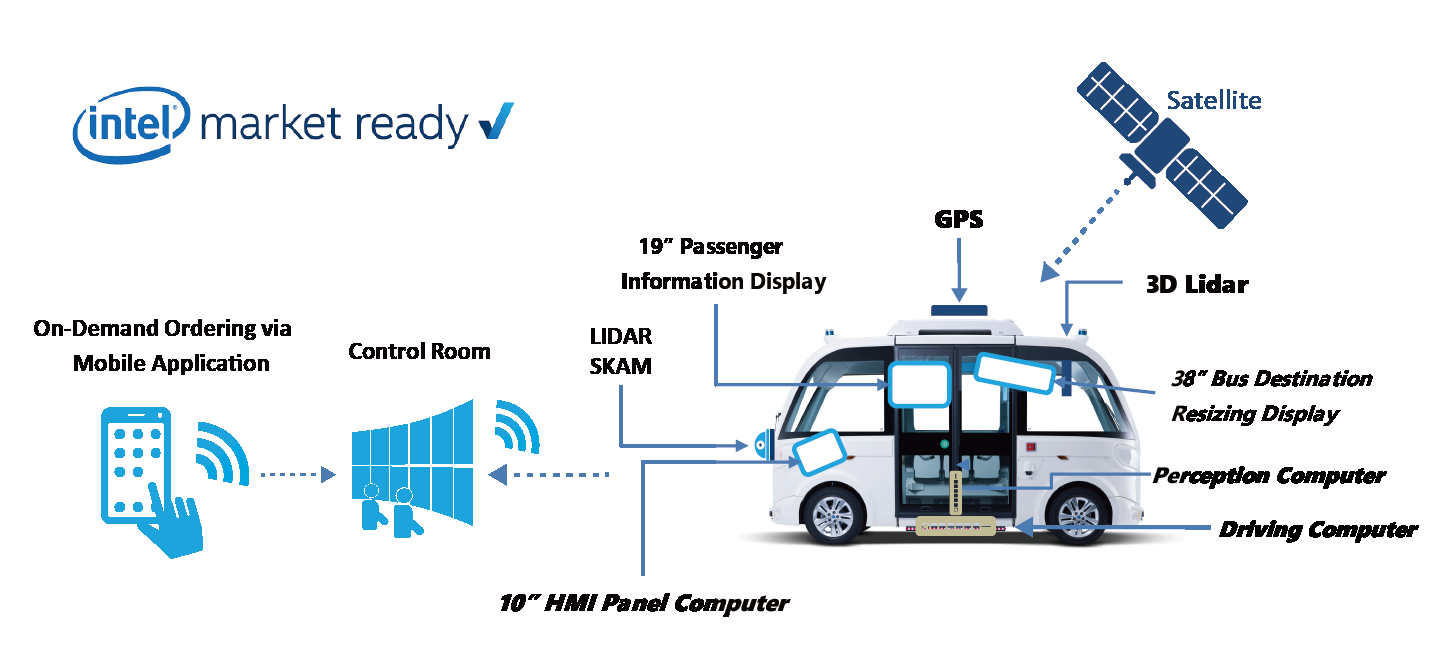

A high-performance GPU/CPU architecture is essential to achieving AI-based security in the traffic field. The HORUS 340 plays a critical role in the sensor fusion framework that underpins traffic surveillance and management systems. 3D LiDAR enforcement, an increasingly recognized and mature surveillance approach, uses LiDAR camera techniques to achieve accurate and efficient traffic monitoring and detection. Moreover, its sensor fusion capability allows the HORUS 340 to be used in a wide range of scenarios, such as UGVs or smart cities.

How Autonomous Vehicle Works

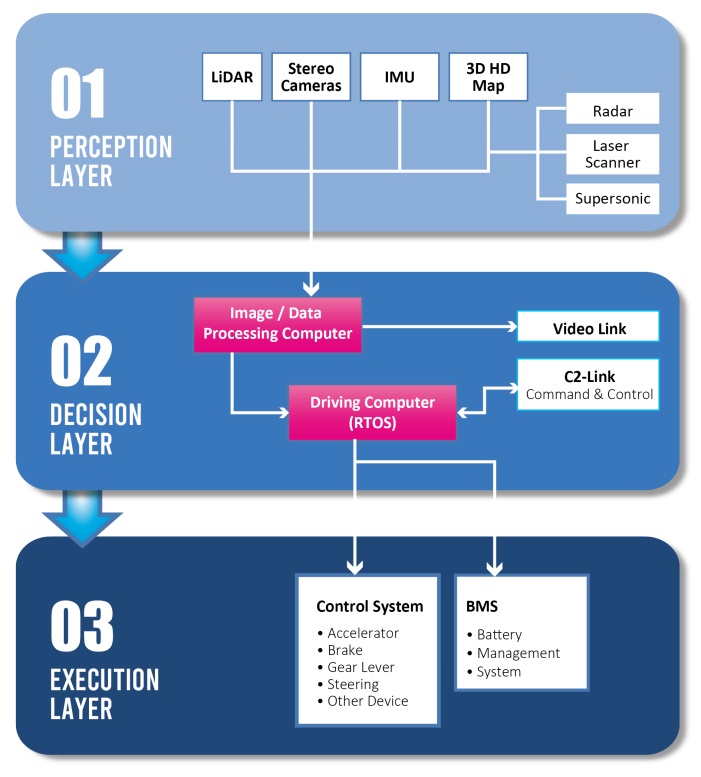

Sensors are the key components that make a vehicle driverless. Cameras, radar, ultrasonic sensors, and LiDAR enable an autonomous vehicle (AV) to visualize its surroundings and detect objects. Cars today are fitted with a growing number of environmental sensors that perform a multitude of tasks. The sensor-integrated control system of an AV comprises three parts: perception, decision, and execution.

01. PERCEPTION LAYER

Perception enables the sensors not only to detect objects, but also to acquire and eventually classify and track the surrounding objects.

02. DECISION LAYER

Decision-making is one of the most challenging tasks that AVs must perform. It encompasses prediction, path planning, and obstacle avoidance, all of which are performed on the basis of previous perceptions.

03. EXECUTION LAYER

The execution layer consists of the interconnections between the accelerator, brakes, gearbox, and so forth. Driven by a Real-Time Operating System (RTOS), these devices carry out the commands issued by the driving computer.
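The way the three layers hand data to one another can be illustrated with a toy sketch. Everything in it is hypothetical and chosen only for illustration: the class names, the 20 m safe gap, and the throttle and brake values are assumptions, and a real AV stack running on an RTOS would be far more involved.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TrackedObject:
    kind: str          # e.g. "pedestrian" or "car"
    distance_m: float  # distance ahead of the vehicle, in metres


@dataclass
class Command:
    throttle: float  # 0.0 (none) to 1.0 (full)
    brake: float     # 0.0 (none) to 1.0 (full)


def perceive(raw_detections: List[Tuple[str, float]]) -> List[TrackedObject]:
    """Perception layer: turn raw (label, distance) detections into tracked objects."""
    return [TrackedObject(kind, dist) for kind, dist in raw_detections if dist >= 0]


def decide(objects: List[TrackedObject], safe_gap_m: float = 20.0) -> Command:
    """Decision layer: brake if any object is closer than the assumed safe gap."""
    nearest = min((obj.distance_m for obj in objects), default=float("inf"))
    if nearest < safe_gap_m:
        return Command(throttle=0.0, brake=1.0)
    return Command(throttle=0.3, brake=0.0)


def execute(command: Command) -> str:
    """Execution layer: stand-in for sending the command to the actuators."""
    return f"throttle={command.throttle:.1f} brake={command.brake:.1f}"


# One pass through the pipeline: a pedestrian 12 m ahead triggers braking.
detections = [("pedestrian", 12.0), ("car", 45.0)]
print(execute(decide(perceive(detections))))
```

Each layer consumes only the output of the previous one, which mirrors the statement above that decisions are made on the basis of previous perceptions.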

Drawing

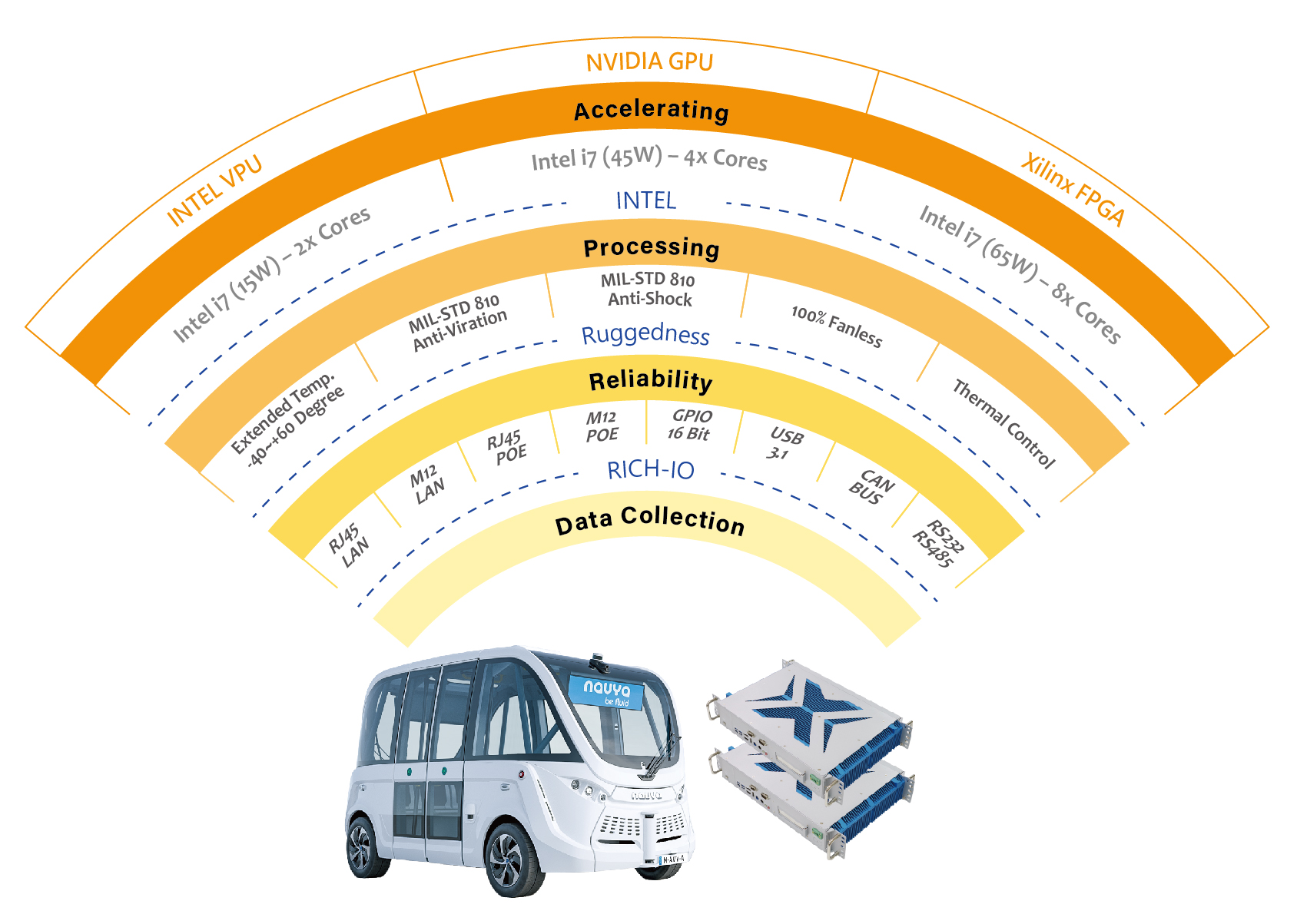

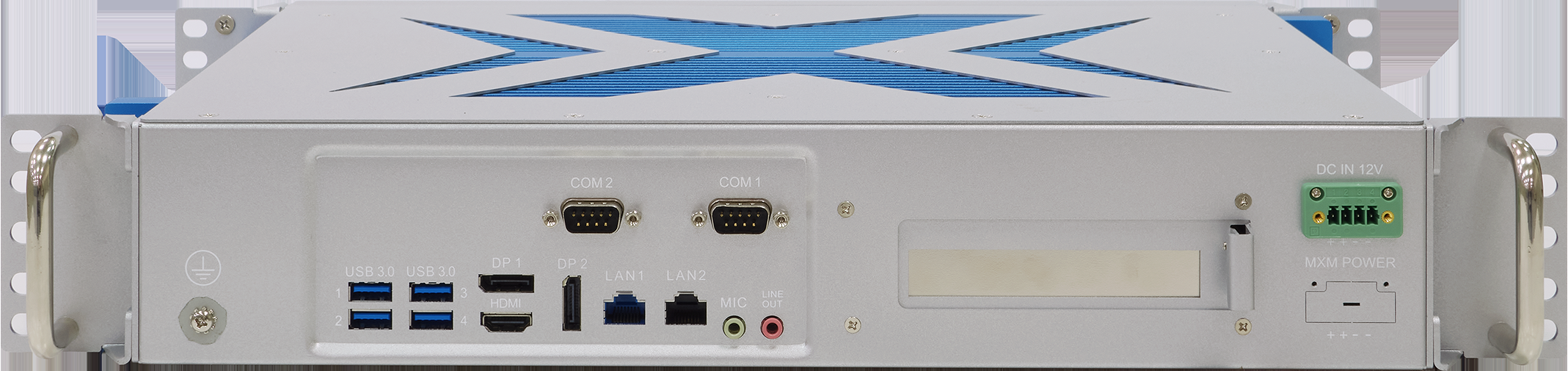

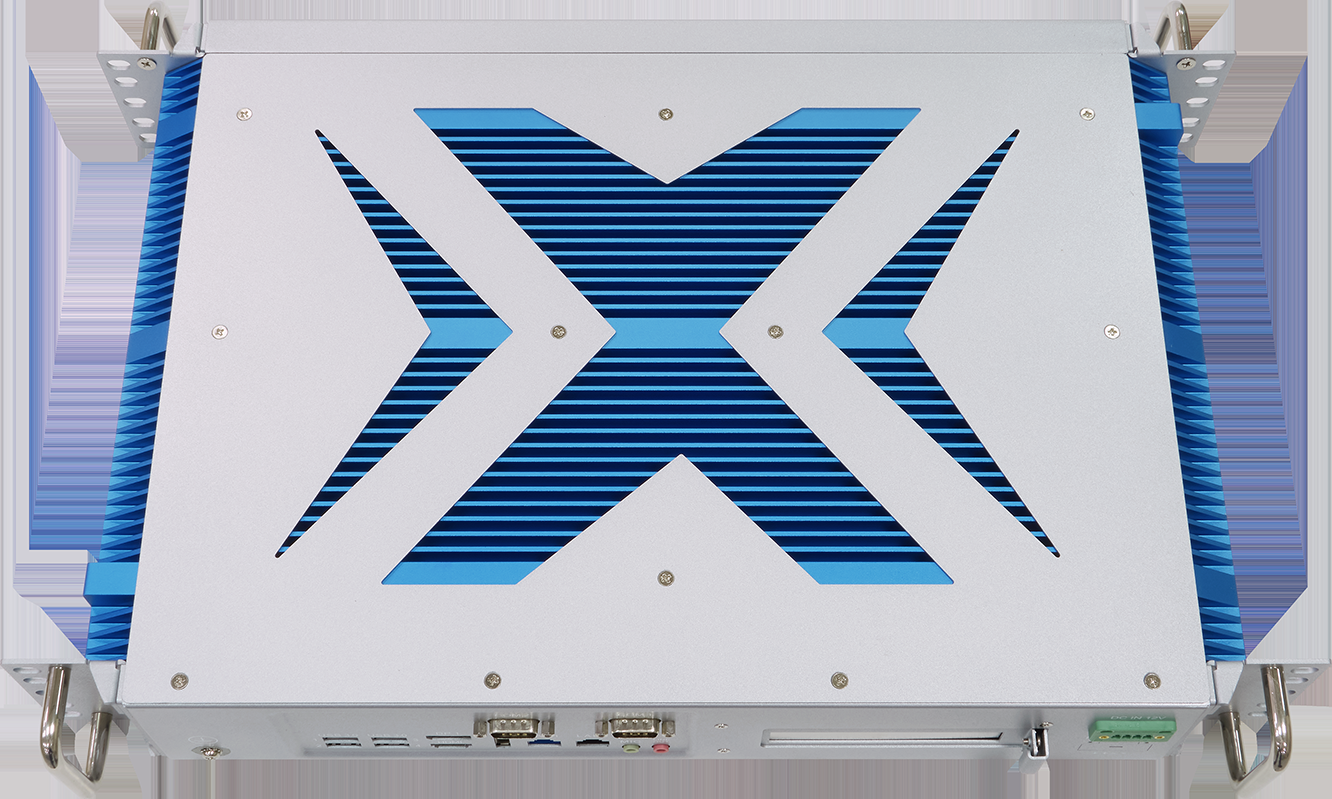

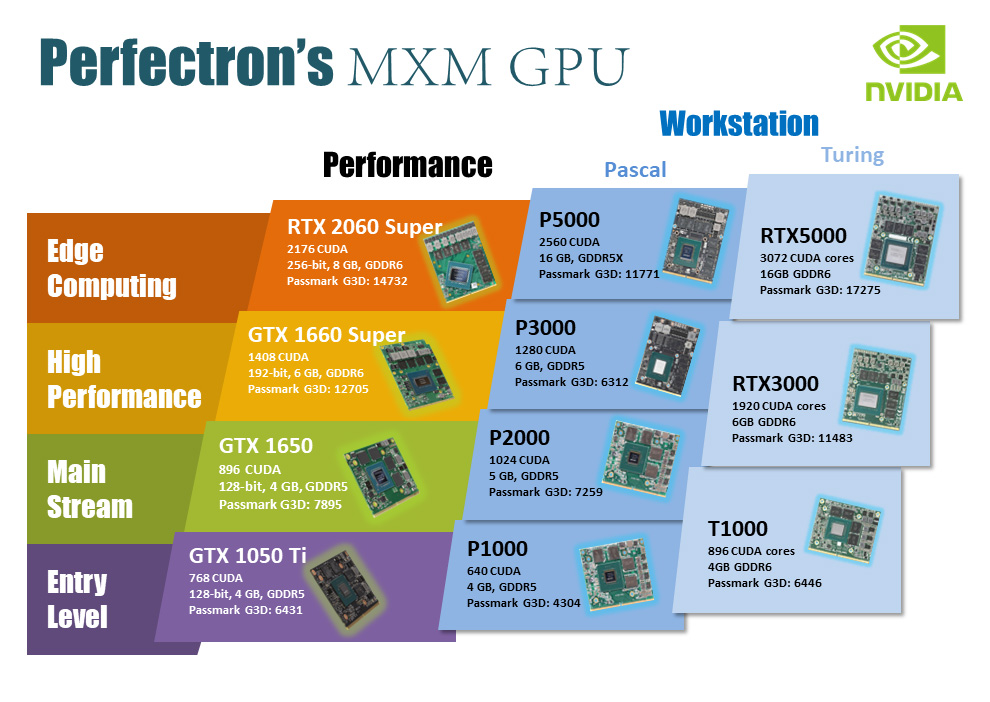

Required High Performance Computing Power

In response to an exponential increase in the use of autonomous vehicles across the globe, Perfectron continuously develops products suited to self-driving cars. Perfectron’s GPGPU AI Fusion computers provide a complete platform for image processing and driving, with the durability to withstand unpredictable conditions and the flexibility to adapt to multiple uses. They can process data from a variety of vision sensors synchronously, and offer a high-performance solution for automated driving that supports all relevant sensor interfaces, buses, and networks.

Depending on the environmental conditions and the application, an AV requires a different hardware composition and system organisation. In recent development and testing, AVs have commonly been used in three main fields: Load lifter, Shuttle bus, and Battle MUTT. To learn more about their operation, please check out the highlighted solutions below.